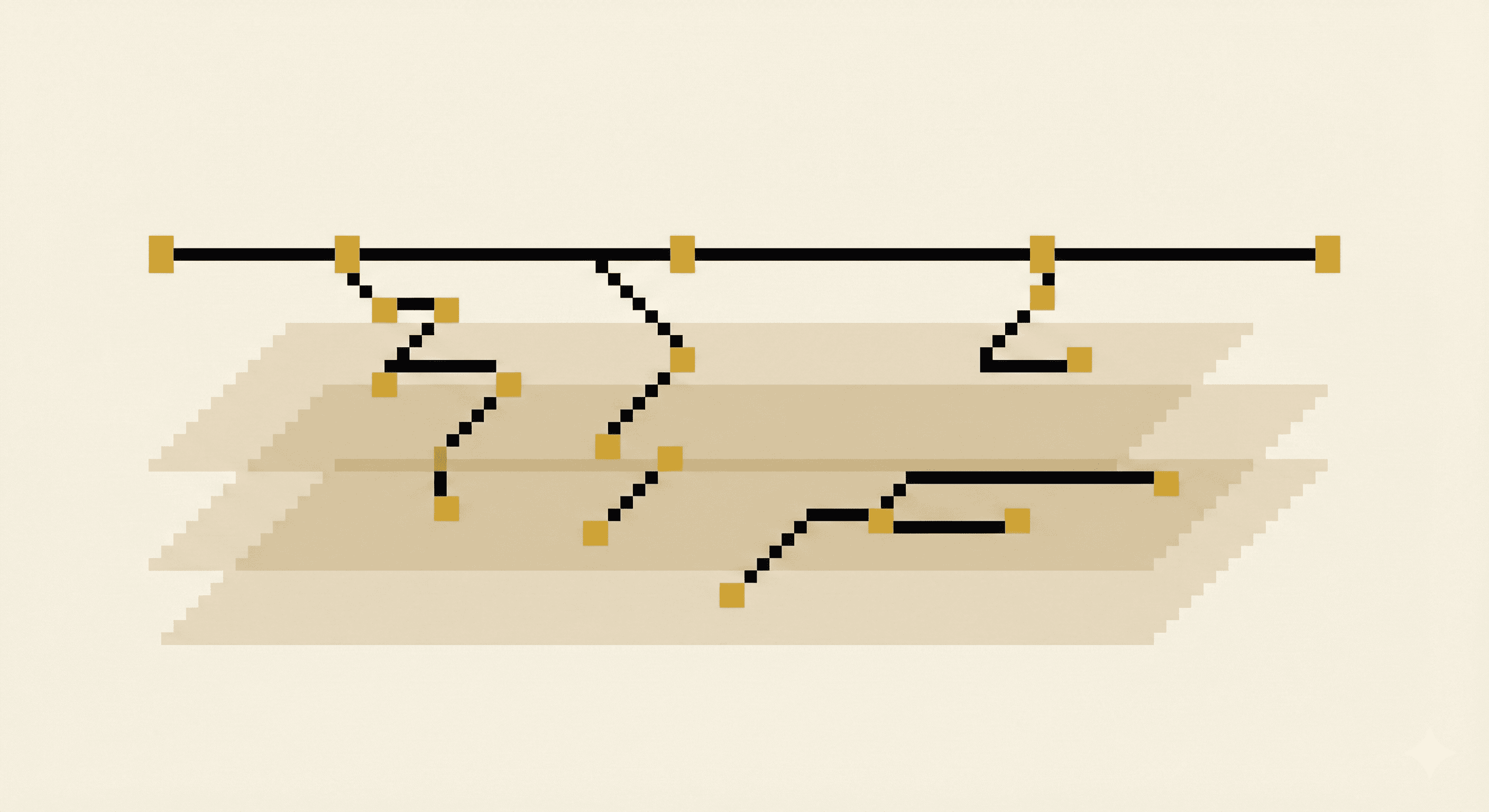

Why we are moving

We have been building AI agents for a little more than a year now. The short version is that they work, and often they work surprisingly well.

But when we ask them to handle larger or more complicated tasks that span multiple systems or multiple steps, we run into the same issue over and over. Either we burn through a huge number of tokens to keep everything in context, or the agent starts to lose track of the actual goal.

The speed trap

Like many teams, we started with agents that were focused on one thing: speed. Instead of broad, multi-step systems, we built very lightweight agents. Sometimes just one agent. Sometimes a small orchestrated chain. Each one had a narrow responsibility. It would call an internal API, fetch what it needed, and return an answer in a few seconds. It looked good in demos and was easy for stakeholders to understand.

This works fine for quick lookups. But it does not scale to work that looks more like the things we already manage in Jira. Real engineering tasks come with epics, user stories, dependencies, and the expectation that, by the end of the day, something gets finished because there was a plan and a task list.

Agents need that same kind of structure once the work stops being trivial.
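To make "that same kind of structure" concrete, here is a minimal sketch of what an agent-facing plan could look like: tasks with explicit dependencies, ordered so nothing starts before its prerequisites. The `Task` and `Epic` classes and the ticket keys are hypothetical illustrations, not Jira's data model or API. The ordering uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class Task:
    """One reviewable unit of work, roughly a user story (hypothetical shape)."""
    key: str
    summary: str
    depends_on: list[str] = field(default_factory=list)
    done: bool = False


@dataclass
class Epic:
    """A larger goal broken into tasks with explicit dependencies (hypothetical shape)."""
    name: str
    tasks: dict[str, Task] = field(default_factory=dict)

    def add(self, task: Task) -> None:
        self.tasks[task.key] = task

    def execution_order(self) -> list[str]:
        # Map each task to its predecessors; the sorter emits dependencies first.
        graph = {key: set(task.depends_on) for key, task in self.tasks.items()}
        return list(TopologicalSorter(graph).static_order())

    def remaining(self) -> list[str]:
        # Unfinished tasks, still in dependency order.
        return [k for k in self.execution_order() if k in self.tasks and not self.tasks[k].done]


# Illustrative data only.
epic = Epic("Migrate billing webhooks")
epic.add(Task("API-1", "Document current webhook payloads"))
epic.add(Task("API-2", "Write new handler", depends_on=["API-1"]))
epic.add(Task("API-3", "Add integration tests", depends_on=["API-2"]))
epic.tasks["API-1"].done = True
print(epic.remaining())  # ['API-2', 'API-3']
```

An agent working from a structure like this always knows what is left and what it is allowed to start next, instead of reconstructing that from chat history.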

From chat windows to async work

During a sprint we hand someone a task and wait for the handoff. They update the board when something ships. Nobody is camped next to their keyboard narrating every thought.

With agents we do the opposite. We hover over a chat window expecting instant answers, even though the work itself involves multiple steps. Most of those setups still follow a 1 input -> 1 output pattern because they were built to live inside chat.

What we actually need looks more like 10 inputs -> 10 outputs, delivered with the same kind of structure you see in Jira or Linear. You cannot get there with shallow, one-shot agents that never build context beyond a single reply.
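A rough sketch of the many-in, many-out shape, assuming a hypothetical `run_agent` coroutine that stands in for one multi-step agent run: hand over a batch of tasks, cap how many run at once, and collect the results when they are ready rather than watching each reply. Only the standard-library `asyncio` module is used; the ticket names and the sleep are placeholders.

```python
import asyncio


async def run_agent(task: str) -> str:
    """Hypothetical stand-in for one agent run; a real one would call models and tools."""
    await asyncio.sleep(0.01)  # simulate slow, multi-step work
    return f"done: {task}"


async def run_batch(tasks: list[str], max_concurrency: int = 3) -> dict[str, str]:
    # Limit how many agent runs are in flight at the same time.
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(task: str) -> tuple[str, str]:
        async with semaphore:
            return task, await run_agent(task)

    # Hand off every task up front and gather the results once they finish,
    # instead of waiting on each reply in a chat window.
    results = await asyncio.gather(*(worker(t) for t in tasks))
    return dict(results)


board = asyncio.run(run_batch([f"TICKET-{i}" for i in range(1, 11)]))
print(len(board))  # 10
```

The semaphore is a design choice: it keeps a large batch from hitting rate limits all at once, at the cost of some total wall-clock time.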

The shift toward deep agents

None of this is a new idea. We started carving out this structure on client work, then noticed LangChain had already published a strong write-up and open-sourced the reference pattern.

The same direction shows up in our own work. We are not trying to stack more agents together; we just stopped treating them like single-task utilities.

Human teams split responsibilities because attention is finite. Architect, engineer, designer, product owner. Each one watches a slice of the problem.

LLMs can technically adopt each of those personas, but without structure the context window fills up with noise and the output gets muddy.

Planner, researcher, executor

So we split the architecture into three roles. The planner breaks the job into clear, reviewable units of work and tracks progress.

The researcher pulls documentation, code, and thread history, then reduces it to the context needed for the next move.

The executor handles the current item, ships the artifact, and moves on without dragging every research note around.
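The loop below is a minimal, model-free sketch of how the three roles can hand work to each other, assuming hypothetical `Planner`, `research`, and `execute` helpers. It is not LangChain's Deep Agents API. The keyword match in `research` is a crude placeholder for the model-driven step that distills documentation, code, and threads into a short brief; what it illustrates is that the executor only ever sees the current item plus that brief, never the full source set.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class WorkItem:
    title: str
    status: str = "todo"  # "todo" until the executor finishes it, then "done"
    artifact: Optional[str] = None


@dataclass
class Planner:
    """Hypothetical planner: holds the goal, the work items, and their progress."""
    goal: str = ""
    items: list[WorkItem] = field(default_factory=list)

    def plan(self, goal: str, steps: list[str]) -> None:
        # A real planner would ask a model to decompose the goal; here the steps are supplied.
        self.goal = goal
        self.items = [WorkItem(step) for step in steps]

    def next_item(self) -> Optional[WorkItem]:
        # First item not yet done, or None when the plan is complete.
        return next((i for i in self.items if i.status == "todo"), None)


def research(item: WorkItem, sources: dict[str, str], limit: int = 200) -> str:
    """Hypothetical researcher: keep only sources that mention the item's first word."""
    keyword = item.title.split()[0].lower()
    relevant = [text for text in sources.values() if keyword in text.lower()]
    return " | ".join(relevant)[:limit]  # a short brief, not the raw documents


def execute(item: WorkItem, brief: str) -> str:
    """Hypothetical executor: sees only the current item and its brief."""
    return f"{item.title} (based on: {brief or 'no context'})"


def run(goal: str, steps: list[str], sources: dict[str, str]) -> list[WorkItem]:
    planner = Planner()
    planner.plan(goal, steps)
    while (item := planner.next_item()) is not None:
        brief = research(item, sources)
        item.artifact = execute(item, brief)
        item.status = "done"
    return planner.items


# Illustrative sources only.
sources = {
    "schema.md": "Webhook payload schema v2",
    "thread": "Decision: retry webhooks 3 times",
    "onboarding.md": "Unrelated onboarding notes",
}
for work_item in run("Ship webhooks v2", ["Webhook handler", "Retry policy"], sources):
    print(work_item.status, work_item.artifact)
```

Keeping the brief small is what stops the executor's context from filling with noise; the planner's item list is the only state that persists from one step to the next.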

LangChain calls this stack a Deep Agent. Tools like Cursor, Claude Code, and Manus show similar mechanics.

What matters now

Our own builds keep landing in the same place: once you stop optimizing for speed at all costs, depth and structure matter more than shaving another two seconds off a response.

We are packaging this pattern into our internal delivery stack now and will share more once the first partner deployment is live. If you want to see the open reference we cross-check against, LangChain's repository is public and actively maintained.

Next, we'll publish the concrete diagrams from those deployments, so if you're curious about how the planner, researcher, and executor behave in practice, stay tuned.